Archive

Dell One Identity Manager 7.0 Rollup Package 1 released

Dell Software just released the first rollup package for Dell One Identity Manager 7.0, which comes with a bunch of resolved issues as well as a couple of cool new features. These are the features I’ve been waiting for due to customer issues:

- Support for encrypted emails using TLS, S/MIME and PGP

- Reading and Assigning SAP security policies

- Support for PowerShell v3 and later

- Transport of UNSRoot definitions including all necessary settings

- Applied sort order of change labels during transports

- Support for transporting Compliance Rules

But there are a couple of additional cool features included as well:

The REST API was extended to support additional capabilities such as calling methods, scripts, customizer methods and events, as well as different collection load types. This makes the REST API a bigger player in the upcoming API economy in IAM / IAG. Some additional SAP HCM info types have also been added to make the SAP HCM sync more powerful and to avoid additional programming effort.

The rollup package is currently available through Dell Support, not yet on the download page.

Seems like I’ll have to spend a night in the hotel upgrading my test environment this week.

Troubleshooting the D1IM LDAP Authentication Module

During a recent go-live with Dell One Identity Manager 6.1.2, we ran into some issues establishing IT-Shop authentication through the LDAP Authentication Module. I’d like to use this blog post to share all the know-how we gathered while tracking down the causes.

So here’s the setup we were using:

We had a custom D1IM 6.1.2 IT-Shop application published to a set of Windows 2008 R2 based IIS web servers. Authentication had to be established against Novell eDirectory 8.8 SP8 using LDAPS on port 636.

This is the way it was intended to work out:

The user lands on the IT-Shop login page and is asked to enter a user name and password. D1IM searches the LDAPAccount table for an account with the given user name. When one is found, D1IM takes the Distinguished Name of that LDAPAccount object and uses it, together with the given password, to bind against the configured LDAP directory through the LDAPADSI provider. If the bind succeeds, the user is logged into the IT-Shop as the Person object connected to the LDAPAccount object.
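The lookup-then-bind flow described above can be sketched in a few lines of Python. This is only an illustration of the sequence, not D1IM code: the `LdapAccount` class, the `authenticate` function and the `ldap_bind` callback are hypothetical names, the account table is a plain dictionary, and the case-insensitive user name lookup is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class LdapAccount:
    """Hypothetical stand-in for a row of the LDAPAccount table."""
    user_name: str
    distinguished_name: str
    person_id: str  # assumed link to the connected Person object


def authenticate(
    user_name: str,
    password: str,
    accounts: Dict[str, LdapAccount],
    ldap_bind: Callable[[str, str], bool],
) -> Optional[str]:
    """Return the person id on success, None otherwise."""
    # Step 1: find the account by user name (case-insensitive by assumption).
    account = accounts.get(user_name.lower())
    if account is None:
        return None
    # An empty password can turn a simple bind into an unauthenticated bind
    # that some LDAP servers accept, so refuse it before asking the directory.
    if not password:
        return None
    # Step 2: bind with the account's DN and the supplied password.
    if not ldap_bind(account.distinguished_name, password):
        return None
    # Step 3: the session runs as the person connected to the account.
    return account.person_id
```

Passing the bind as a callback keeps the sketch independent of any particular LDAP library; in a real test you would plug in whatever performs the bind against your directory.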

Here’s how we approached the activation of the LDAP Authentication Module:

We went into Designer, activated the Dynamic LDAP Authentication Module and fed it with the configuration data: the LDAP RootDN, the FQDN of the LDAP server (initially an F5 FQDN load balancing across all the LDAP servers), the port number and the authentication method. With this in place, we went into the web portal configuration tool to activate the LDAP authentication module.

Then we were pretty much done… At least, we thought we were. Our first test of the LDAP-based authentication ran into the first issue. In the IT-Shop log we saw the following error message:

Value RootDN is required for authentication

As we had the RootDN set in the module configuration, we cross-checked the setting against the real RootDN of the LDAP directory and against the domain object established in D1IM, and found no mismatch at all. So we opened a service request with Dell Software Support.

Finding #1 was that the IT-Shop is not able to use the configuration of the LDAP authentication module. We therefore needed a manual entry in the web.config, as the configuration tool is not able to define the necessary key. Dell provided this information in a KB article pretty quickly. The KB can be found here: https://support.software.dell.com/de-de/identity-manager/kb/158022?kblang=en-US

So we put our LDAP configuration into the web.config and retested, which ran into the next issue. The IT-Shop log now showed the next error message:

The server is not operational

So we used VINSProviderTest.exe to test the login to the LDAP directory from one of our web servers. With the LDAPNovell provider the login worked fine; with the LDAPADSI provider, which the LDAP authentication module uses, it failed. So we got the impression that the LDAP authentication module is not compatible with Novell eDirectory. While mailing back and forth with Dell Support, we came to the conclusion that we would need a custom authentication module talking LDAPNovell for our customer, which would have been a massive challenge given the limited time until go-live.

But here’s the good news: it does work with Novell eDirectory for LDAP authentication if all the environment configuration is right.

As I mentioned, we had configured the F5 FQDN, which load balances all LDAP requests across the LDAP servers in the infrastructure. At the request of Dell Support, we looked at the SSL certificates of the LDAP servers and found that the web servers did not have the root CA certificate installed, so they did not trust the server certificates at all. Installing the root certificate removed the warnings on the server certificates, but it did not help us authenticate. Next we checked the server certificates themselves and recognized that they were issued for the individual servers, with nothing covering the FQDN of the F5 load balancer. So as a next step we asked the LDAP team whether there was any chance to add subject alternative names carrying the F5 FQDN to their certificates, so that the LDAPADSI provider could authenticate successfully. With regard to our go-live, this approach failed on timing as well.
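To make the name mismatch concrete, here is a minimal Python sketch of the kind of check a TLS client performs: does the name we connect to appear among the certificate's subject alternative names? It is a simplified illustration, not what the LDAPADSI provider actually runs. The function names and the host names in the usage note are made up, and the certificate is assumed to be a dictionary in the shape returned by Python's `ssl.SSLSocket.getpeercert()`.

```python
from typing import List


def dns_names(cert: dict) -> List[str]:
    """Collect the DNS entries from a getpeercert()-style dictionary."""
    return [value for kind, value in cert.get("subjectAltName", ()) if kind == "DNS"]


def hostname_matches(pattern: str, hostname: str) -> bool:
    """Compare one certificate name with the host name we connect to."""
    pattern, hostname = pattern.lower(), hostname.lower()
    if pattern.startswith("*."):
        # Simplification: a wildcard covers exactly one leftmost label.
        head, _, tail = hostname.partition(".")
        return bool(head) and tail == pattern[2:]
    return pattern == hostname


def cert_covers(cert: dict, hostname: str) -> bool:
    """True if any DNS subject alternative name matches the host name."""
    return any(hostname_matches(name, hostname) for name in dns_names(cert))
```

With a certificate that lists only `ldap01.corp.example` and `ldap02.corp.example`, `cert_covers(cert, "ldap.corp.example")` is false, which is the situation we had with the load balancer name.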

Dell also published a KB article describing this issue, which can be found here: https://support.software.dell.com/de-de/identity-manager/kb/176042?kblang=en-US

So we moved on to the next workaround: connecting directly to one of the LDAP servers, bypassing the F5 load balancer. But even this was unsuccessful. We were close to the goal, but authentication was still failing with the error message “The server is not operational”. What came next has nothing to do with D1IM at all, but we had no idea what was coming…

Being totally desperate, we got hold of one of the best Novell eDirectory engineers in the customer’s organization to run an LDAP trace during a login attempt. What we saw was pretty upsetting:

SSLv3 handshake failed: no matching ciphers found

So we started looking into the security configuration. Based on the customer’s security policy, the system integrators had done some hardening work on the IIS servers used for the D1IM IT-Shop, disabling everything except TLSv1.2 and disabling weak encryption ciphers. The next step in our troubleshooting was an idea from one of our system integrators: using the OpenSSL tool suite to test which SSL / encryption features the current instance of Novell eDirectory supports. The result was pretty sobering: SSLv3 and TLSv1.0, but nothing more secure. So the LDAP engineers opened a service request with Novell and were told that Novell eDirectory does not support TLSv1.1 and TLSv1.2. Such support is to become available with Novell eDirectory 9.0 sometime in 2016.
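We did this test with the OpenSSL tools, but the idea can also be sketched with Python's standard `ssl` module: pin the client to exactly one protocol version, attempt a handshake, and note whether it succeeds. Treat this as a rough sketch under assumptions. The helper names are made up, certificate checks are switched off because only the protocol negotiation is of interest, and SSLv3 is left out because current Python builds usually cannot speak it. A failed handshake can also mean that the local OpenSSL build refuses the old version, not only that the server does.

```python
import socket
import ssl
from typing import Dict

# Protocol versions to try, one handshake per version.
VERSIONS = {
    "TLSv1.0": ssl.TLSVersion.TLSv1,
    "TLSv1.1": ssl.TLSVersion.TLSv1_1,
    "TLSv1.2": ssl.TLSVersion.TLSv1_2,
}


def pinned_context(version: ssl.TLSVersion) -> ssl.SSLContext:
    """Build a client context that offers exactly one protocol version."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    # Diagnostics only: skip certificate and host name verification.
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    ctx.minimum_version = version
    ctx.maximum_version = version
    return ctx


def probe(host: str, port: int, timeout: float = 5.0) -> Dict[str, bool]:
    """Return which of the listed versions completed a handshake."""
    results = {}
    for label, version in VERSIONS.items():
        try:
            ctx = pinned_context(version)
            with socket.create_connection((host, port), timeout=timeout) as raw:
                with ctx.wrap_socket(raw, server_hostname=host) as tls:
                    results[label] = tls.version() is not None
        except (OSError, ValueError):
            # ssl.SSLError is an OSError; ValueError covers versions the
            # local OpenSSL build does not know at all.
            results[label] = False
    return results
```

Calling `probe("ldap01.corp.example", 636)` (a made-up host name) returns a dictionary with one true/false entry per version, which makes it easy to see whether the web servers and the directory have any protocol version in common.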

After getting an exceptional approval from the customer’s IT security office, we lowered the SSL / encryption level of our D1IM web servers. Since then, we’ve been authenticating successfully against the customer’s Novell eDirectory.

So if you ever want to use the D1IM LDAP authentication module in the IT-Shop portal, there shouldn’t be many potential issues left for you to run into.

I also want to use this blog post to say “many thanks” to Rene O. from Dell Support and Ralph G. from the Dell Development Department in Dresden, who did not lose faith in D1IM and the authentication module until the end. I think we all learned a lot during the time we were dealing with this special service request.

First look at EmpowerID

I had the chance to get a demo of one of the .NET-based IAM tools earlier this week: the EmpowerID product platform. As I come from a legacy Voelcker background, went through the acquisition by Quest Software in 2010 and then joined my current employer (vendor neutral, although we do have unique competencies for a couple of IDM / IAM / IAG tools), I’m still working mainly with what is now Dell One Identity Manager, which is completely based on the Microsoft .NET stack, as is the EmpowerID suite. The two have a couple of things in common: both tools come with their very own database-based meta-directory (EmpowerID supports Microsoft SQL Server, Dell supports Microsoft SQL Server as well as Oracle Database), both use IIS for their web applications, both ship with a graphical workflow designer, and both ship with a bundle of native connectors but can be extended through their APIs with custom connectors against their extensible meta-directory. There are a couple more things in common between D1IM and the EmpowerID suite, but there is also a whole bunch of differences between the two solutions approaching the same problem. The main issue for the EmpowerID suite, at least in the European market, is the missing SAP connector to provision into the SAP security stack. They do have a connector to provision identities from or to SAP HCM natively, which Dell is currently missing as a native connector.

From what I’ve seen during that 90-minute demo, I’d like to get a demo installation of the EmpowerID suite for some hands-on experiments. It looked pretty nice, pretty quick and pretty responsive, although the majority of the configuration and administration is done through web interfaces.

Sailpoint addressing Data Access Governance

With the acquisition of Whitebox Security, Sailpoint is extending its portfolio into the emerging Data Access Governance market. The Whitebox Security suite will be rebranded to fit the Sailpoint product naming scheme as SecurityIQ. The plan is to correlate identity information with the data-centric view of the IT infrastructure to bring visibility into “Who has access to what, using which entitlement?”. According to the press release, SecurityIQ will be integrated into IdentityIQ and IdentityNow. With that, Sailpoint might offer the same depth of integration between Identity and Access Management (IAM) / Identity Access Governance (IAG) and Data Access Governance (DAG) as Dell has with Dell One Identity Manager and the Dell One Identity Manager Data Governance Edition, which was built on the foundation of the former Quest Access Manager. The market will stay heated up…

A D1IM programming snippet

There has been a discussion with two colleagues about an implementation detail within Dell One Identity Manager. It came up during my family vacation, and I picked it up after getting back to my desk on Thursday this week. It all started with the simple question of how to catch the name of the event that triggered a process. The initial answer was “there’s no way to get there”, but this answer is outdated at the very least. There is a way to catch the event name in a D1IM process: the EventName property. So if you have a process that is raised by two different events, but you want a process step to be generated only for one dedicated event, the generation condition would look like this:

Value = CBool(EventName = "<Name of the Event>")

Just wanted to share this; it might be a helpful snippet in one project implementation or another.

A must read by Ian Glazer (@iglazer)

One of the must-reads during my family vacation was the speech Ian Glazer gave at CIS 2015, titled “Identity is having its TCP/IP moment”. He talks about standards-based IAM. His conclusion (which I totally agree with): not using standards is the wrong way. OK, he puts it as “the Banyan Vines of identity”.

He gave this as a speech without any slide deck. The speech can be read on his private blog: https://www.tuesdaynight.org/2015/06/09/identity_is_having_its_tcpip_moment.html

Ian also embedded a video recording of his speech for all of us who are too impatient to read the whole text. But I do recommend reading the full text, as it’s even more impressive than “just the recording”. Thanks to Ian for such a great speech.

Gartner Magic Quadrant for IDaaS (2015)

Early this month, Gartner released the 2015 edition of its Magic Quadrant for Identity and Access Management as a Service covering the worldwide market. The full report can be read here: Magic Quadrant for IDaaS (2015)

Gartner sees Okta as the only vendor in the Leaders quadrant, while there’s a bunch of big names in the Visionaries quadrant, such as Microsoft, IBM, Ping Identity and Salesforce. I’m a bit surprised by the fact that Centrify made it into the Visionaries quadrant as well; I would have seen them in the Niche Players quadrant. But that might be due to the fact that I have a European focus.

Microsoft going to deprecate IDMU-features in Windows Server

With this blog post, Microsoft announces the deprecation of the Identity Management for Unix features in Windows Server, starting with Windows Server 2012 R2. Affected features are:

- the UNIX Attributes tab in Active Directory Users and Computers (dsa.msc)

- NIS (Network Information Service)

- RSAT (Remote Server Administration Tools)

In the comments on the blog post, a member of the Active Directory Documentation Team clarified that the Active Directory schema will not be touched. So extensions already in place will stay, as will the data stored in these attributes.

Microsoft made the decision to deprecate these features and to recommend using alternative tools for managing Unix attributes and features on Windows Server.

Installing and Configuring ForgeRock OpenDJ on Windows

Unfortunately I’m currently out of action, being sick at home. But this gives me the chance to get hands-on with some of the tools I have on my list to explore this year. The first of them is the OpenDJ LDAP server from ForgeRock.

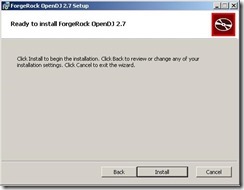

I’ve used the nightly build of OpenDJ 2.7 and ran through the MSI installation wizard first.

For the initial shot I’ve used the default installation directory.

The installation is done pretty quickly. What I’m missing here is an option to start the configuration wizard directly from the installation wizard. @ForgeRock: Maybe this might be a good add-on for future releases of OpenDJ.

To start the graphical setup, you have to run the setup.bat file located in the installation directory without any additional command line parameters.

This is the configuration wizard that comes up.

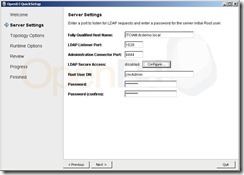

The first configuration screen comes up with the FQDN of the server we’re currently running on, asking for the LDAP Listener Port (default 389, my configuration will be 1028 as there are other LDAP servers already running on my current machine). I’ve left the administration port at 4444 as I’ve no service bound on this port yet.
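Before settling on a non-default listener port, it can help to check that nothing else is bound to it. A small Python sketch for that follows; the function name is made up and it only checks the loopback address by default. Note that on Unix-like systems a port below 1024 will also be reported as not free when the check runs without the necessary privileges.

```python
import socket


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """Try to bind the port; if the bind fails, treat the port as taken."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe_socket:
        try:
            probe_socket.bind((host, port))
        except OSError:
            # Covers "address already in use" as well as missing privileges.
            return False
    return True
```

For the setup above, `port_is_free(1028)` and `port_is_free(4444)` would be the two checks to run before starting the wizard.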

For testing purposes I’ve not configured LDAP secure access yet; I’ll add that in a later blog post.

The last step on this configuration screen is to define the root user DN and password for the administration account.

The next screen is to configure replication if needed. I’m planning to set up a Linux server in parallel, hosting an OpenDJ LDAP server as well, and to have it replicate with my current server. So I’ve left the default replication port and configured it as secure, but left the replication information empty as this is my first OpenDJ LDAP server so far.

Next configuration step is the definition of the Directory Base DN. I’ve chosen

dc=corpdir,dc=local

for this initial shot (you might see different DNs in later blog posts).

There are some options to load data initially using an LDIF file or to import sample data for testing purposes as well. I’ve decided to just create the base entry so far and to set up the remaining LDAP structure later on.

The next screen is to define specific Java runtime options. I’ve used the defaults here.

The next screen lets you review all settings before finishing the installation.
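For reference, a base entry for a `dc=...` suffix is a very small piece of LDIF. The Python sketch below writes one for a given base DN. It shows a generic entry using the standard `top` and `domain` object classes; whether the wizard creates exactly these attributes is an assumption on my side, and the function name is made up.

```python
def base_entry_ldif(base_dn: str) -> str:
    """Return LDIF text for the base entry of a dc=... suffix."""
    # The first RDN (for example "dc=corpdir") names the entry itself.
    first_rdn = base_dn.split(",", 1)[0]
    attribute, _, value = first_rdn.partition("=")
    if attribute.strip().lower() != "dc" or not value.strip():
        raise ValueError("this sketch only handles base DNs starting with dc=")
    lines = [
        f"dn: {base_dn}",
        "objectClass: top",
        "objectClass: domain",
        f"dc: {value.strip()}",
    ]
    return "\n".join(lines) + "\n"


print(base_entry_ldif("dc=corpdir,dc=local"))
```

The printed text can be saved to a file and used with the wizard's LDIF import option instead of the "just create the base entry" choice, should you want to extend it with further entries later.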

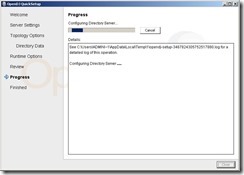

The configuration wizard is now taking care of creating my LDAP instance as I’ve configured it in the screens before.

In the end we do have a running instance of the ForgeRock OpenDJ LDAP Server. Pretty simple, isn’t it?

PoCs – are they really an inefficient use of time and money?

This blog post is meant as a response to one of the latest blog posts from Identropy, “IAM Proofs of Concepts (POC) – An Inefficient Use of Time and Money”, written by Luis Almeida (btw: congratulations and all the best for your new job). It took me a while to gather all my arguments for this reply.

The first argument that Luis brings up is that PoCs are costly and deliver very limited value.

The cost aspect is an interesting one. Typically (at least for the majority of PoCs I did in my career), costs are borne by the vendor or integrator attending the PoC at the customer’s site. It’s in the interest of the vendor or system integrator to show the capabilities of their product within the customer’s environment. This is why most vendors or system integrators do not charge their potential customers for PoC attendance. The only cost (and I do agree that this should not be underestimated) on the customer side is defining the PoC scenario and establishing a lab environment to run the PoC in. Typically there are already test environments beside the production network of the potential customer, where development and software testing take place before IT systems move to production. The PoC scenario should already be defined (at least in parts) by the potential customer’s daily processes for onboarding, updating or offboarding employee identities. If the customer is a greenfield customer (having no legacy IDM solution in place), the approval processes mostly live in request forms, available on the potential customer’s intranet or as printouts, which can be used to gather the information currently needed for request fulfillment. I’ve also experienced PoCs where part of the PoC was to demonstrate the conceptual approach of migrating a manual process into a request-driven, automated workflow, in order to give the customer some insight into the approaches used and the way they are implemented in the solution.

The delivery of limited value is a bit tricky. For sure, the teams sent by vendors or integrators to do PoCs are mostly pure pre-sales consultants with a dedicated skill set for delivering a successful PoC in order to sell the product. Mostly, but not every time. In smaller organizations PoCs are delivered by project-proven consultants and architects. Depending on the background of the vendor’s or integrator’s PoC delivery team, PoCs deliver different outcomes. It should be the duty of the vendor or integrator to set up a team of pre-sales consultants as well as professional services consultants, not only to demonstrate the solution but also to make the customer aware of potential pitfalls and of the emerging complexity of the solution to be implemented, and to identify strategies to mitigate the risks that are likely to affect the upcoming implementation project.

The next argument that Luis brings up is the handover of responsibility from the sales / pre-sales team to the services team after the PoC in order to deliver a successful project.

Indeed, this is the most complex part of the transition into an IAM implementation project. Information might get lost, the SOW created by the pre-sales team might not fit the customer’s requirements, and the services team might get caught in a financial frame that is too tight to do the project in a safe way. But there is a solution to this problem: integrate the services organization into your pre-sales cycle as early as possible. What’s that good for? They can assist the pre-sales consultants onsite during the PoC by bringing in real-life experience instead of purely trained pre-sales know-how on how to achieve goals in a short amount of time to get the PoC scenario done. The services organization can also assist in creating the SOW by bringing in real-life experience from ongoing or former implementations, and can justify potentially higher project costs through their experience with pitfalls, complexity and data-driven issues of implementation projects.

The third argument brought up by Luis is the significant opportunity to get a better outcome from a product evaluation phase by engaging a services partner. I totally agree with this approach, as it allows the customer organization to focus on their needs and living processes while having a partner in place that is dedicated to the IAM implementation and the search for the perfect product for the customer’s situation, architecture and team. The only thing I have to raise for this scenario: are service providers or solution integrators really unbiased? They are probably a bit more unbiased than a vendor’s sales and pre-sales team, but not completely unbiased, as they typically partner with a couple of vendors to distribute and customize their solutions. From a customer’s perspective, the best approach would be to engage one service provider for the product evaluation phase and the definition of use cases and requirements, and to have another service provider or solution integrator, maybe the vendor itself, do the project implementation.

The last argument that comes up in Luis’ blog post is „If POCs were an effective means to evaluate IAM solutions, there would not be so many failed implementations in the market.“.

For sure, there are failed implementations out there that came through PoCs and a suboptimal handling of the transition from the sales cycle into the implementation phase executed by services organizations. But an implementation might also fail if the product evaluation was done by a service provider, as there are so many factors that can be a source of project failure.

As a final conclusion from both blog posts, I think we do agree on the fact that things have to change around the product evaluation process. Things have to change on the customer side as well as on the side of vendors and system integrators. And last but not least: this does not only affect IAM projects.